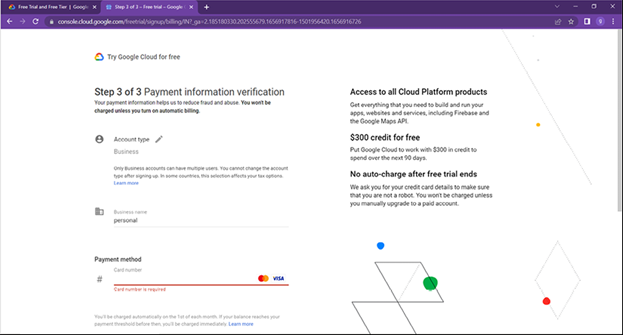

Deletion of bucket – Basics of Google Cloud Platform-1

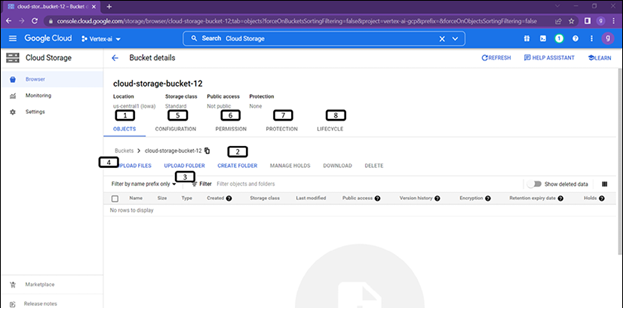

Follow the steps shown in Figure 1.28 to delete the bucket:

- Select the bucket that needs to be deleted.

- Click DELETE. A pop-up prompts you to type delete to confirm the deletion.
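The same two steps can also be sketched in code. The following is a minimal illustration, not the console's actual implementation: `delete_bucket` and its parameters are hypothetical names, and `client` is assumed to behave like `google.cloud.storage.Client` from the `google-cloud-storage` Python library (its `get_bucket` method and the bucket's `delete(force=True)` call).

```python
def delete_bucket(client, bucket_name, typed_confirmation):
    """Delete a bucket only if the user typed the word 'delete'.

    `client` is assumed to behave like google.cloud.storage.Client.
    Returns True if the bucket was deleted, False if confirmation failed.
    """
    # Mirror the console pop-up: refuse unless the user typed "delete".
    if typed_confirmation.strip().lower() != "delete":
        return False

    # Step 1: select the bucket that needs to be deleted.
    bucket = client.get_bucket(bucket_name)

    # Step 2: delete it. force=True asks the library to remove the objects
    # inside the bucket first; the library only does this for buckets that
    # hold a small number of objects.
    bucket.delete(force=True)
    return True


# Hypothetical usage (requires the google-cloud-storage package and
# credentials; "my-example-bucket" is a placeholder name):
#
#   from google.cloud import storage
#   delete_bucket(storage.Client(), "my-example-bucket", "delete")
```

Passing the client in as an argument keeps the confirmation logic separate from the cloud call, so the check can be exercised without touching a real bucket.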

Compute services are an essential component of any cloud offering. Among the most important products that Google provides to companies that want to host their applications in the cloud are its compute services. The umbrella term “computing” covers a wide variety of specialized services, configuration choices, and products.

Google Cloud offers the following computing and hosting options:

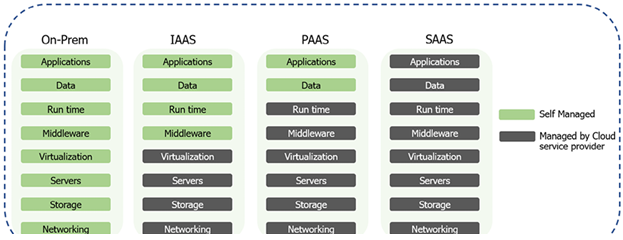

- Compute Engine: Google Compute Engine is an IaaS platform that lets users create and use virtual machines in the cloud as server resources, without acquiring or managing physical server hardware. Virtual machines are servers hosted in the cloud and run in Google’s data centers. They offer configurable choices for the central processing unit (CPU), graphics processing unit (GPU), memory, and storage, along with several operating system options. Virtual machines can be accessed through both a command line interface (CLI) and a web console. When a Compute Engine instance is decommissioned, the data stored on it is erased. Data that must outlive the instance should therefore be kept on persistent disks, which are available as standard (hard disk) or solid-state drives.

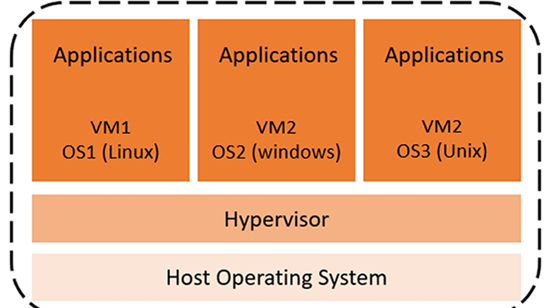

The hypervisor is what runs virtual machines. It is software installed on the host operating system that creates and runs multiple virtual machines by letting them share the host’s resources. Figure 1.29 shows the relationship between VMs, the hypervisor, and the physical infrastructure.

Figure 1.29: Compute Engine
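To make the idea of resource sharing concrete, the sketch below models a hypervisor as a simple bookkeeper that hands out a host's CPUs and memory to virtual machines. It is a deliberately simplified toy, not how Compute Engine's hypervisor works: the `Hypervisor` class, its fields, and the sizes used are all hypothetical, and real hypervisors can also overcommit resources.

```python
from dataclasses import dataclass, field


@dataclass
class Hypervisor:
    """Toy model: tracks how a host's resources are shared among VMs."""
    host_cpus: int
    host_memory_gb: int
    vms: dict = field(default_factory=dict)  # VM name -> (cpus, memory_gb)

    def free_cpus(self):
        return self.host_cpus - sum(cpus for cpus, _ in self.vms.values())

    def free_memory_gb(self):
        return self.host_memory_gb - sum(mem for _, mem in self.vms.values())

    def create_vm(self, name, cpus, memory_gb):
        """Create a VM if the host still has enough free resources."""
        if name in self.vms:
            return False
        if cpus > self.free_cpus() or memory_gb > self.free_memory_gb():
            return False
        self.vms[name] = (cpus, memory_gb)
        return True


host = Hypervisor(host_cpus=8, host_memory_gb=32)
print(host.create_vm("vm-a", 4, 16))  # True
print(host.create_vm("vm-b", 2, 8))   # True
print(host.create_vm("vm-c", 4, 16))  # False: only 2 CPUs and 8 GB remain
```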

Google Compute Engine is a good choice in terms of pricing, stability, backups, scalability, and security. It is cost-efficient because consumers pay only for the time they use the resources. It supports live migration of VMs from one host to another, which helps keep the system reliable. It also has a reliable, built-in, and redundant backup mechanism. For scalability, it offers reservations that help guarantee applications have the capacity they need as they grow. Compute Engine also provides additional security for the applications running on it.

Compute Engine is a good solution for migrating established systems, or when fine-grained management of the operating system or other operational features is required.
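The pay-for-what-you-use pricing described above comes down to simple arithmetic. The sketch below compares a VM that runs only during working hours with one that runs all month. The hourly rate is a made-up placeholder, not a real Compute Engine price, and `usage_cost` is a hypothetical helper that ignores discounts and minimum billing increments.

```python
def usage_cost(seconds_used, hourly_rate):
    """Cost of running a resource for `seconds_used` seconds."""
    return round(seconds_used / 3600 * hourly_rate, 4)


# Assumption: a placeholder rate chosen only for illustration.
HYPOTHETICAL_HOURLY_RATE = 0.05

# A VM used 8 hours a day on 22 working days.
working_hours_only = usage_cost(8 * 3600 * 22, HYPOTHETICAL_HOURLY_RATE)

# The same VM left running 24 hours a day for 30 days.
always_on = usage_cost(24 * 3600 * 30, HYPOTHETICAL_HOURLY_RATE)

print(f"Working hours only: {working_hours_only:.2f}")
print(f"Always on:          {always_on:.2f}")
```

Under these assumed numbers, stopping the VM outside working hours cuts the bill to roughly a quarter of the always-on cost, since 176 hours are billed instead of 720.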

- Kubernetes Engine: Google Kubernetes Engine (GKE) is GCP’s container-as-a-service offering. Clusters that run containerized applications are managed by Kubernetes, the open-source cluster management system, which provides the mechanisms users need to interact with container clusters. A Kubernetes cluster consists of a control plane and worker nodes. In GKE, the worker nodes are provisioned as Compute Engine instances.

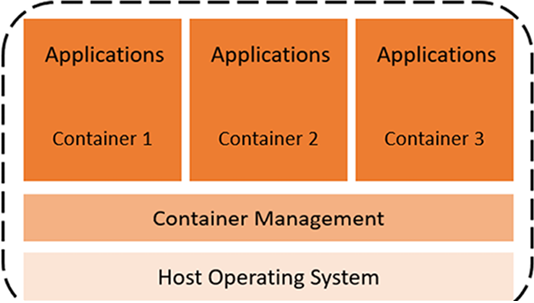

Figure 1.30: Kubernetes Engine

Virtual machines run guest operating systems on top of a hypervisor. With Kubernetes Engine, by contrast, compute resources are isolated in containers, which are managed by the container manager and the host operating system.
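The split between a control plane and worker nodes can be illustrated with a toy model. The sketch below is not the Kubernetes scheduler or any GKE API: `WorkerNode`, `ControlPlane`, and the "most free CPU" placement rule are hypothetical simplifications meant only to show the control plane deciding where containers run while the worker nodes supply the capacity.

```python
class WorkerNode:
    """Toy worker node: a machine with a fixed amount of CPU for containers."""

    def __init__(self, name, cpu_capacity):
        self.name = name
        self.cpu_capacity = cpu_capacity
        self.containers = {}  # container name -> CPU requested

    def free_cpu(self):
        return self.cpu_capacity - sum(self.containers.values())


class ControlPlane:
    """Toy control plane: decides which worker node runs each container."""

    def __init__(self, nodes):
        self.nodes = nodes

    def schedule(self, container, cpu):
        """Place the container on the node with the most free CPU.

        Returns the chosen node's name, or None if no node has room.
        """
        candidates = [n for n in self.nodes if n.free_cpu() >= cpu]
        if not candidates:
            return None
        target = max(candidates, key=lambda n: n.free_cpu())
        target.containers[container] = cpu
        return target.name


cluster = ControlPlane([WorkerNode("node-1", 4), WorkerNode("node-2", 2)])
print(cluster.schedule("web", 2))    # node-1
print(cluster.schedule("api", 2))    # node-1 (tie goes to the first node)
print(cluster.schedule("db", 2))     # node-2
print(cluster.schedule("cache", 1))  # None: both nodes are full
```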