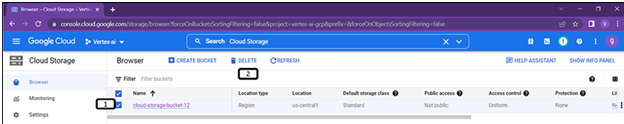

Deletion of bucket – Basics of Google Cloud Platform-2

Distinctive features of Kubernetes Engine include automatic upgrades, automatic node maintenance, and autoscaling (Kubernetes Engine allocates additional resources when the load on the applications increases).

- Users also get advanced cluster administration features, such as load balancing, node pools, logging, and monitoring.

- Load balancing distributes traffic across the Compute Engine instances in the cluster.

- Within a cluster, node pools are used to identify subsets of nodes in order to provide greater flexibility.

- Logging and monitoring work in conjunction with Cloud Monitoring so that you can see what is happening inside your cluster.

- Google App Engine: Google App Engine is GCP's platform as a service (PaaS) for developing and deploying scalable apps. It is a form of serverless computing: users run their code in a computing environment without having to set up virtual machines or Kubernetes clusters.

It supports a variety of programming languages, including Java, Python, Node.js, and Go, and users can develop their apps in any of them. App Engine also comes with a number of APIs and services that help developers build powerful, feature-rich applications. These include:

- Access to the application log

- Blobstore, for serving large data objects

Other important characteristics include a pay-as-you-go model, which means that you pay only for the resources you use. When the number of users of an application spikes, App Engine immediately increases the number of available resources, and it reduces them again when the load drops.
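The scale-up and scale-down behaviour described above can be pictured with a small calculation. This is only an illustrative model, not App Engine's actual scaling algorithm: the function name `target_instances`, the per-instance capacity, and the minimum and maximum limits are all assumptions made for the sketch.

```python
import math


def target_instances(requests_per_second, capacity_per_instance,
                     min_instances=0, max_instances=10):
    """Return how many instances a simple autoscaler would aim for.

    Hypothetical model: every instance is assumed to handle a fixed
    number of requests per second, and the result is clamped to the
    configured minimum and maximum.
    """
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    # Round up so that the load never exceeds the assumed capacity.
    needed = math.ceil(requests_per_second / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))
```

With an assumed capacity of 50 requests per second per instance, a load of 120 requests per second gives a target of 3 instances, and an idle application drops back to the minimum. Under pay-as-you-go pricing, fewer running instances means a lower bill.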

Effective diagnostic services include Cloud Monitoring and Cloud Logging, which help scan the running app to locate faults. The app reports help developers fix any faults they find right away.

Traffic splitting, which supports A/B testing, is a feature that lets App Engine automatically route incoming traffic to different versions of the app. Users can then plan subsequent increments based on which version performs best.
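The idea behind splitting traffic between versions can be sketched in a few lines of Python. This is a minimal sketch of weighted routing in general, not App Engine's implementation: the function name `pick_version`, the use of a hashed client key, and the version names are assumptions made for illustration.

```python
import hashlib
from typing import Mapping


def pick_version(client_key: str, weights: Mapping[str, float]) -> str:
    """Map a client key to a version name in proportion to the weights.

    Hypothetical helper: the same key always lands on the same version,
    so a returning client keeps seeing one variant of the app.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Hash the key to a stable point in the range [0, 1).
    digest = hashlib.sha256(client_key.encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64
    cumulative = 0.0
    # Sort the versions so the mapping does not depend on dict order.
    for version, weight in sorted(weights.items()):
        cumulative += weight / total
        if point < cumulative:
            return version
    # Guard against floating-point rounding at the upper edge.
    return version
```

Calling `pick_version("client-42", {"v1": 0.9, "v2": 0.1})` would send roughly one client in ten to `v2`, which is the kind of split an A/B test relies on.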

There are two distinct kinds of App Engine:

- Standard App Engine: Applications run completely isolated from the server's operating system and from any other applications running on that server at the same time. Apart from the application code, no operating system packages or other compiled software need to be installed.

- Flexible App Engine: Users run Docker containers inside the App Engine environment. This is the kind to use when libraries or other third-party software must be installed in order to run the application code.

- Google Cloud Functions: Cloud Functions is a lightweight serverless computing solution for event-driven processing. It is GCP's function as a service (FaaS) product. Cloud Functions removes the time-consuming tasks of maintaining servers, configuring software, upgrading frameworks, and patching operating systems.

Because GCP fully manages both the software and the infrastructure, the user only has to provide the code that Cloud Functions runs in response to an event. Cloud events are occurrences that take place inside a cloud computing environment, such as changes to the data stored in a database, new files added to a storage system, or the creation of new virtual machines. A trigger is a declaration that you are interested in a certain event or set of events. Binding a function to a trigger allows the user to capture and act on events. Event data is the data that is passed to the Cloud Function when the event trigger results in function execution:

Figure 1.31: Cloud Function
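To make the relationship between event, trigger, and event data concrete, here is a minimal Python sketch of a function that reacts to a new file in a storage bucket. The handler name `on_file_uploaded` is hypothetical. The `(event, context)` signature and the `bucket` and `name` fields follow the convention of Python background functions for Cloud Storage events as best understood here; treat them as assumptions and check the current Cloud Functions documentation before relying on them.

```python
def on_file_uploaded(event, context):
    """Handle a 'new file in storage' event (hypothetical handler).

    event:   dict holding the event data passed in by the trigger.
    context: metadata about the event (unused in this sketch).
    """
    # Fall back to placeholders if the assumed fields are missing.
    bucket = event.get("bucket", "<unknown bucket>")
    name = event.get("name", "<unknown object>")
    message = f"New object {name} in bucket {bucket}"
    # Printed output would normally end up in the function's logs.
    print(message)
    return message
```

The function itself contains no server or infrastructure code. The binding to a trigger happens at deployment time, and the function can be exercised locally by calling it with a hand-written dictionary, for example `on_file_uploaded({"bucket": "example-bucket", "name": "report.csv"}, None)`.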