Summary of Compute services of GCP – Basics of Google Cloud Platform

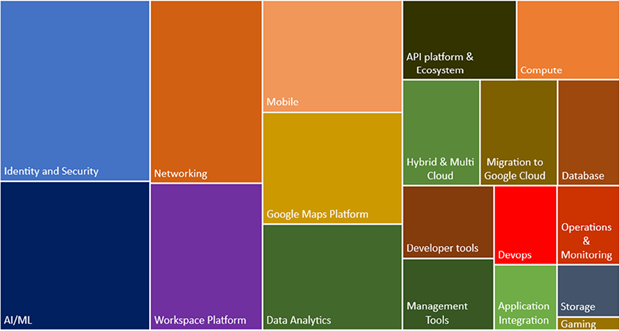

The summary of compute services of GCP can be seen in Figure 1.32:

Figure 1.32: Summary of compute services

Creating Compute Engine VM instances

Compute Engine instances can run Google’s public Linux and Windows Server images as well as private custom images. Docker containers can also be deployed on instances that use the public Container-Optimized OS image. When creating an instance, users need to specify the zone, the operating system, and the machine configuration (number of virtual CPUs and amount of memory). Each Compute Engine instance has a small persistent boot disk that contains the OS, and more storage can be added. By default, a VM instance’s system time is set to Coordinated Universal Time (UTC). Follow the steps below to create a virtual machine instance:
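The three required choices (zone, OS image, and machine configuration) correspond to flags of the Google Cloud CLI command `gcloud compute instances create`. The sketch below only assembles that command as a list of arguments and prints it; it does not contact Google Cloud. The instance name `demo-vm`, the zone `us-central1-a`, the `e2-medium` machine type, and the `debian-12` image family are hypothetical example choices, and running the printed command assumes an installed and authenticated gcloud CLI.

```python
import shlex

def minimal_create_command(name, zone, machine_type, image_family, image_project):
    """Build (but do not run) a gcloud command for a VM with the three
    required choices: zone, OS image, and machine configuration."""
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",                    # where the VM runs
        f"--machine-type={machine_type}",    # number of vCPUs and amount of memory
        f"--image-family={image_family}",    # OS: newest image in this family
        f"--image-project={image_project}",  # project that publishes the public image
    ]

# Hypothetical example: a Debian VM in us-central1-a on an e2-medium machine.
cmd = minimal_create_command("demo-vm", "us-central1-a", "e2-medium",
                             "debian-12", "debian-cloud")
print(shlex.join(cmd))
```

For other public images only the last two arguments change, for example the `windows-2022` family in the `windows-cloud` project, or `cos-stable` in `cos-cloud` for Container-Optimized OS.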

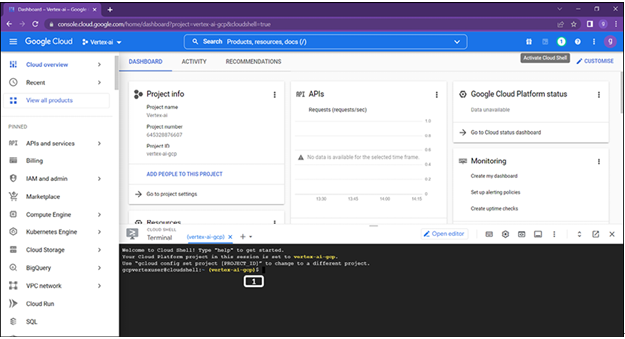

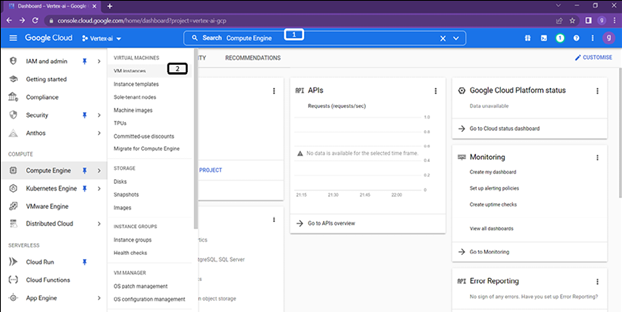

Step 1: Open Compute Engine:

Follow the steps described in Figure 1.33 to open Compute Engine in the console:

- Users can type Compute Engine in the search box.

- Alternatively, users can open the navigation menu and, under Compute, go to Compute Engine | VM instances.
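The VM instances page can also be opened directly by URL. As a small sketch, the helper below builds that link for one project; `my-sample-project` is a hypothetical project ID, and the `/compute/instances` path is the one the console uses for Compute Engine | VM instances at the time of writing.

```python
from urllib.parse import urlencode

CONSOLE_BASE = "https://console.cloud.google.com"

def vm_instances_url(project_id):
    """Return a link to Compute Engine | VM instances for one project."""
    query = urlencode({"project": project_id})  # URL-encodes the project ID
    return f"{CONSOLE_BASE}/compute/instances?{query}"

# Hypothetical project ID.
print(vm_instances_url("my-sample-project"))
```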

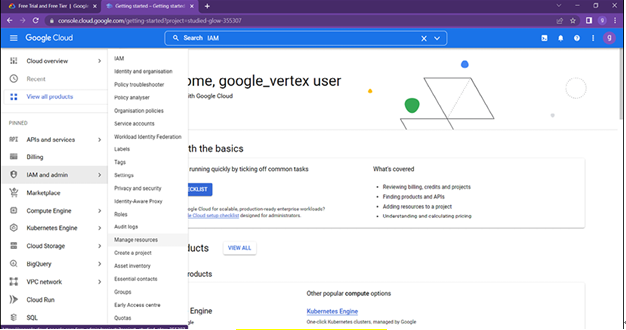

Step 2: Enable the Compute Engine API:

Users will be prompted to enable the API the first time they access Compute Engine resources, as shown in Figure 1.34:

Figure 1.34: API enablement for VM creation

- If prompted, enable the API.
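Enabling the API from the console amounts to enabling the `compute.googleapis.com` service for the project. The sketch below builds the corresponding gcloud commands without running them; `my-sample-project` is a hypothetical project ID.

```python
import shlex

COMPUTE_API = "compute.googleapis.com"  # service name of the Compute Engine API

def enable_api_command(project_id, service=COMPUTE_API):
    """gcloud command that enables an API (service) for a project."""
    return ["gcloud", "services", "enable", service, f"--project={project_id}"]

def list_enabled_command(project_id):
    """gcloud command that lists the APIs already enabled for a project;
    compute.googleapis.com appears in its output once enablement finishes."""
    return ["gcloud", "services", "list", "--enabled", f"--project={project_id}"]

# Hypothetical project ID.
for command in (enable_api_command("my-sample-project"),
                list_enabled_command("my-sample-project")):
    print(shlex.join(command))
```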

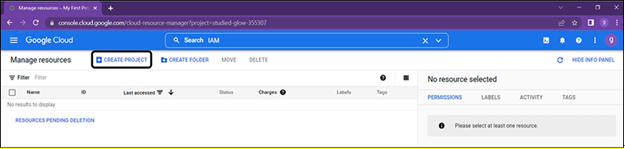

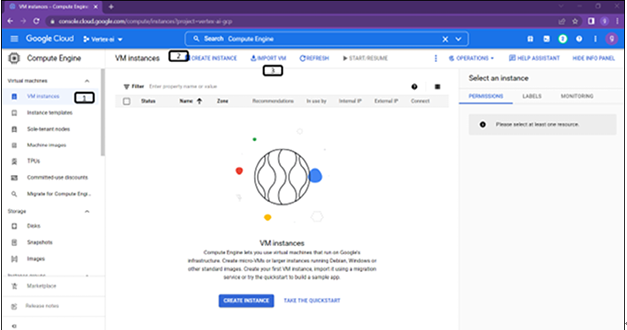

Step 3: VM instance selection:

Follow the steps described in Figure 1.35 to select the VM:

- Select VM instances.

- Click on CREATE INSTANCE to begin the creation process.

- GCP also provides an IMPORT VM option for migrating existing virtual machines to Compute Engine.
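The VM instances page lists each instance with its zone and status. The same information is available from `gcloud compute instances list --format=json`, and the sketch below parses such output. The JSON sample is hypothetical and trimmed to three fields of the instance resource (`name`, `zone`, `status`); the API returns `zone` as a full resource URL, so only its last path segment is kept.

```python
import json

# Hypothetical, trimmed output of: gcloud compute instances list --format=json
SAMPLE_JSON = """[
  {"name": "web-1", "status": "RUNNING",
   "zone": "https://www.googleapis.com/compute/v1/projects/my-sample-project/zones/us-central1-a"},
  {"name": "batch-1", "status": "TERMINATED",
   "zone": "https://www.googleapis.com/compute/v1/projects/my-sample-project/zones/europe-west1-b"}
]"""

def summarize_instances(instances_json):
    """Return (name, zone, status) tuples, keeping only the zone's short name."""
    rows = []
    for instance in json.loads(instances_json):
        zone = instance["zone"].rsplit("/", 1)[-1]  # last path segment, e.g. us-central1-a
        rows.append((instance["name"], zone, instance["status"]))
    return rows

for name, zone, status in summarize_instances(SAMPLE_JSON):
    print(f"{name:10} {zone:16} {status}")
```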

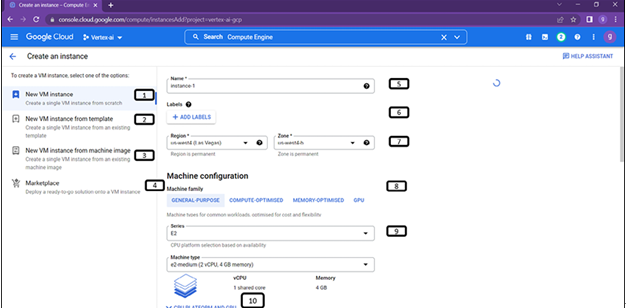

Step 4: VM instance creation:

Follow the steps described in Figure 1.36 to select location and machine type for the VM instance:

Figure 1.36: Location and machine selection for VM instance

- GCP provides multiple options for VM creation. The first, New VM instance, creates a VM from scratch; we will use this option.

- VMs can be created from an instance template. Users can create a template once and reuse it to create VMs later.

- VMs can be created from a machine image, a resource that stores the configuration, metadata, and other information needed to create a VM.

- GCP also provides the option to deploy a ready-to-go solution onto a VM instance.

- The user must provide an instance name.

- Labels are optional; they are key/value pairs that group VMs together.

- Select region and zone.

- GCP provides a wide range of machine configurations, grouped into machine families: GENERAL-PURPOSE, COMPUTE-OPTIMIZED, MEMORY-OPTIMIZED, and GPU.

- Within each machine family, GCP offers a few machine series, and within each series, machine types that set the balance between vCPUs and memory.

- Under CPU platform and GPU, users can optionally choose the CPU platform and attach GPUs; they can also select the vCPUs-to-core ratio and the visible core count.
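The creation options above (from scratch, from an instance template, or from a machine image), together with the instance name and labels, can be sketched as one command builder. The validation rules here are simplified assumptions: instance names follow the RFC 1035 pattern (lowercase letters, digits, and hyphens, starting with a letter, not ending with a hyphen, at most 63 characters), and labels are restricted to lowercase ASCII letters, digits, `_`, and `-`. The template name `web-template` and the labels are hypothetical.

```python
import re
import shlex

# RFC 1035 style: starts with a lowercase letter, no trailing hyphen, 1-63 chars.
NAME_PATTERN = re.compile(r"[a-z]([-a-z0-9]{0,61}[a-z0-9])?")
# Simplified label rules (assumption: ASCII only); keys start with a letter.
LABEL_KEY_PATTERN = re.compile(r"[a-z][a-z0-9_-]{0,62}")
LABEL_VALUE_PATTERN = re.compile(r"[a-z0-9_-]{0,63}")

def create_command(name, zone, template=None, machine_image=None, labels=None):
    """Build a gcloud create command for a new VM, a VM from an instance
    template, or a VM from a machine image (at most one source)."""
    if not NAME_PATTERN.fullmatch(name):
        raise ValueError(f"invalid instance name: {name!r}")
    if template and machine_image:
        raise ValueError("use either a template or a machine image, not both")
    args = ["gcloud", "compute", "instances", "create", name, f"--zone={zone}"]
    if template:
        args.append(f"--source-instance-template={template}")
    elif machine_image:
        args.append(f"--source-machine-image={machine_image}")
    if labels:
        for key, value in labels.items():
            if not (LABEL_KEY_PATTERN.fullmatch(key)
                    and LABEL_VALUE_PATTERN.fullmatch(value)):
                raise ValueError(f"invalid label: {key}={value}")
        args.append("--labels=" + ",".join(f"{k}={v}" for k, v in labels.items()))
    return args

# Hypothetical template and labels.
cmd = create_command("web-1", "us-central1-a", template="web-template",
                     labels={"env": "dev", "team": "web"})
print(shlex.join(cmd))
```

Omitting both `template` and `machine_image` corresponds to New VM instance, in which case the machine type and image flags from the other choices in this step would be added.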

Note: Try selecting different machine configurations and observe the variation in the price estimates.
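Prices are shown only in the console estimate, so the sketch below focuses on the configuration itself: it builds the machine-related flags of `gcloud compute instances create` and splits a predefined machine type name into series, type, and vCPU count. That split is a naming heuristic, not an official API. The machine type and GPU names in the example are hypothetical choices whose availability varies by zone. Because VMs with attached GPUs cannot live-migrate, the sketch sets the host maintenance policy to `TERMINATE` whenever a GPU is requested.

```python
import shlex

def describe_machine_type(machine_type):
    """Heuristically split a predefined machine type name into
    (series, type, vCPUs), e.g. 'n2-highmem-8' -> ('n2', 'highmem', 8).
    Shared-core names such as 'e2-medium' have no vCPU suffix -> None."""
    parts = machine_type.split("-")
    vcpus = int(parts[2]) if len(parts) > 2 and parts[2].isdigit() else None
    return parts[0], parts[1], vcpus

def machine_flags(machine_type, min_cpu_platform=None, gpu_type=None,
                  gpu_count=1, threads_per_core=None, visible_core_count=None):
    """Build the machine configuration flags for gcloud compute instances create."""
    flags = [f"--machine-type={machine_type}"]
    if min_cpu_platform:
        flags.append(f"--min-cpu-platform={min_cpu_platform}")
    if gpu_type:
        flags.append(f"--accelerator=type={gpu_type},count={gpu_count}")
        # VMs with attached GPUs cannot live-migrate during host maintenance.
        flags.append("--maintenance-policy=TERMINATE")
    if threads_per_core:    # vCPUs-to-core ratio; 1 disables simultaneous multithreading
        flags.append(f"--threads-per-core={threads_per_core}")
    if visible_core_count:  # number of physical cores exposed to the guest OS
        flags.append(f"--visible-core-count={visible_core_count}")
    return flags

# Hypothetical machine type and GPU choices.
print(describe_machine_type("n2-highmem-8"))
print(shlex.join(machine_flags("n1-standard-4", gpu_type="nvidia-tesla-t4",
                               threads_per_core=1)))
```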

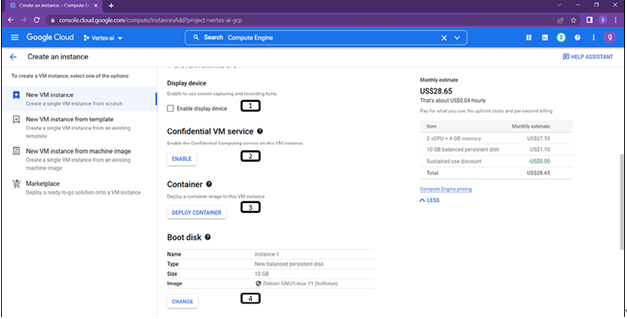

Step 5: VM instance disk and container selection:

Follow the steps described in Figure 1.37 to select boot disks for the instance:

Figure 1.37: Boot disk selection for VM instance

- Enable display device: enables the use of screen capturing and recording tools.

- Confidential VM service: adds protection to your data in use by keeping the memory of this VM encrypted.

- The Deploy Container option is useful when a container needs to be deployed to the VM instance using a Container-Optimized OS image.

- Users can change the operating system and the size and type of the boot disk (HDD or SSD) by clicking CHANGE.
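The boot disk, display, Confidential VM, and Deploy Container choices in this step can also be expressed as gcloud flags. This is a minimal sketch under stated assumptions: the console's disk choices are mapped to the `pd-standard` (HDD-backed), `pd-balanced`, and `pd-ssd` (SSD-backed) persistent disk types; Confidential VM needs a supported machine series (for example N2D with AMD SEV), and older gcloud releases used `--confidential-compute` rather than `--confidential-compute-type`; `create-with-container` is assumed to be available in your gcloud release. The image, instance, and `nginx` container names are hypothetical.

```python
import shlex

# Console disk choices mapped to persistent disk types.
DISK_TYPES = {
    "standard": "pd-standard",  # HDD-backed
    "balanced": "pd-balanced",  # SSD-backed, balances cost and performance
    "ssd": "pd-ssd",            # SSD-backed
}

def boot_disk_flags(image_family, image_project, size_gb=10, disk="balanced",
                    display_device=False, confidential=False):
    """Flags for the OS image, boot disk size and type, display device,
    and Confidential VM settings."""
    flags = [
        f"--image-family={image_family}",
        f"--image-project={image_project}",
        f"--boot-disk-size={size_gb}GB",
        f"--boot-disk-type={DISK_TYPES[disk]}",
    ]
    if display_device:
        flags.append("--enable-display-device")  # screen capture and recording tools
    if confidential:
        flags.append("--confidential-compute-type=SEV")  # encrypted VM memory (AMD SEV)
    return flags

def container_vm_command(name, zone, container_image):
    """Deploy Container: boots a Container-Optimized OS image and runs the container."""
    return ["gcloud", "compute", "instances", "create-with-container", name,
            f"--zone={zone}", f"--container-image={container_image}"]

# Hypothetical image, instance, and container names.
print(shlex.join(boot_disk_flags("debian-12", "debian-cloud", size_gb=20, disk="ssd")))
print(shlex.join(container_vm_command("web-container", "us-central1-a", "nginx")))
```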

Step 6: VM instance access settings:

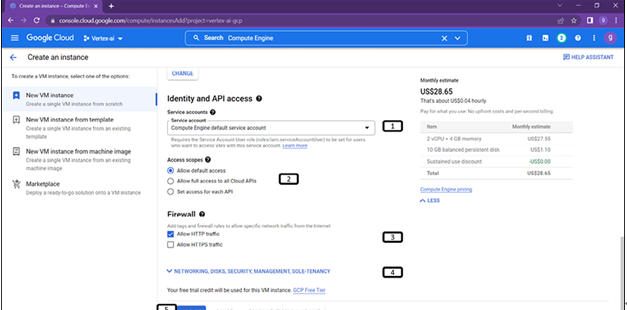

Follow the steps described in Figure 1.38 to configure access:

Figure 1.38: Access control for VM instance

- Choose the service account associated with the VM instance: either the default Compute Engine service account or a service account that the user has created.

- Users need to select the access scopes, which control which Google Cloud APIs the VM can access: Allow default access, Allow full access to all Cloud APIs, or Set access for each API.

- By default, the firewall blocks incoming HTTP and HTTPS traffic from the internet; select Allow HTTP traffic and/or Allow HTTPS traffic to enable it.

- Additional options, such as deletion protection, reservations, network tags, a custom hostname, and whether to delete or keep the boot disk when the instance is deleted, are provided under Networking, Disks, Security, and Management.

- Click on Create.
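The access and management settings in this step can be sketched as gcloud flags too. Assumptions in this sketch: the service account email is hypothetical; `cloud-platform` is the scope alias matching full access to all Cloud APIs, and omitting `--scopes` keeps the default scopes; the console's Allow HTTP traffic and Allow HTTPS traffic checkboxes are modeled as the `http-server` and `https-server` network tags, which only let traffic in when a firewall rule targets those tags, so the sketch also builds such a rule for TCP port 80 under the hypothetical name `allow-http-demo`.

```python
import shlex

def access_flags(service_account=None, full_api_access=False,
                 allow_http=False, allow_https=False):
    """Service account, access scopes, and network tags used by firewall rules."""
    flags = []
    if service_account:  # omit to use the default Compute Engine service account
        flags.append(f"--service-account={service_account}")
    if full_api_access:  # omit to keep the default access scopes
        flags.append("--scopes=cloud-platform")
    tags = [tag for tag, wanted in (("http-server", allow_http),
                                    ("https-server", allow_https)) if wanted]
    if tags:
        flags.append("--tags=" + ",".join(tags))
    return flags

def management_flags(hostname=None, deletion_protection=False, keep_boot_disk=False):
    """A few of the options found under the advanced settings."""
    flags = []
    if hostname:             # custom hostname; must be a fully qualified domain name
        flags.append(f"--hostname={hostname}")
    if deletion_protection:  # blocks deletion until protection is turned off
        flags.append("--deletion-protection")
    if keep_boot_disk:       # retain the boot disk when the instance is deleted
        flags.append("--no-boot-disk-auto-delete")
    return flags

def http_firewall_rule_command(network="default"):
    """Firewall rule letting TCP port 80 from anywhere reach VMs tagged http-server."""
    return ["gcloud", "compute", "firewall-rules", "create", "allow-http-demo",
            f"--network={network}", "--allow=tcp:80",
            "--source-ranges=0.0.0.0/0", "--target-tags=http-server"]

# Hypothetical service account in a hypothetical project.
print(shlex.join(access_flags("vm-runner@my-sample-project.iam.gserviceaccount.com",
                              allow_http=True)))
print(shlex.join(management_flags(deletion_protection=True, keep_boot_disk=True)))
print(shlex.join(http_firewall_rule_command()))
```

Keeping the firewall rule separate from the instance flags mirrors how GCP works: the tag is attached to the VM, while the rule that uses it belongs to the VPC network and can serve many VMs.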