Command Line Interface – Basics of Google Cloud Platform-2

The primary distinction between Persistent Disk and network file storage is that the latter, as the name suggests, provides disk storage over the network. This makes it possible to build systems with many parallel services that read and write files on the same disk storage, mounted across the network.
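As a rough illustration of why a shared network mount is convenient, the sketch below shows two services using ordinary file I/O against the same directory. The function names and the `/mnt/filestore` path are hypothetical, and a temporary directory stands in for the real mount point so the example runs anywhere.

```python
import tempfile
from pathlib import Path


def write_report(shared_dir: Path, name: str, text: str) -> Path:
    """Producer service: write a file onto the shared file system."""
    target = shared_dir / name
    target.write_text(text, encoding="utf-8")
    return target


def read_report(shared_dir: Path, name: str) -> str:
    """Consumer service: read the same file through the same mount."""
    return (shared_dir / name).read_text(encoding="utf-8")


# A temporary directory stands in for the network mount (for example
# /mnt/filestore, a hypothetical path) so the sketch runs anywhere.
with tempfile.TemporaryDirectory() as mount:
    shared = Path(mount)
    write_report(shared, "frame-001.txt", "rendered")
    result = read_report(shared, "frame-001.txt")
```

Neither function knows or cares that the directory is backed by network storage, which is the property that lets file-based applications move to the cloud with few changes.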

The following are some examples of uses for Filestore:

- Most on-premises applications need a file system interface, so Filestore makes it easy to migrate these kinds of enterprise applications to the cloud.

- It is used in media rendering to reduce latency.

- Cloud Storage: Google Cloud Storage is Google Cloud's object storage service. It offers a number of useful built-in features, such as object versioning and fine-grained permissions (per object or per bucket), which can simplify development and reduce operational overhead. Cloud Storage also serves as the foundation for a variety of other services.

This kind of storage is uncommon in typical on-premises systems, which usually have more limited capacity and fast, dedicated connectivity. Object storage, by contrast, has a very simple interface. In layman's terms, its value proposition is that you can get and put any file you want through a REST API, that capacity can grow without limit so any amount of data can be stored, and that individual objects can reach the terabyte scale. Buckets are the namespaces Cloud Storage uses to organize the objects stored in it. Although a bucket can hold many objects, each object belongs to exactly one bucket.
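To make the "get and put through a REST API" idea concrete, here is a minimal sketch that builds the Cloud Storage JSON API URLs for downloading and uploading one object. The bucket and object names are hypothetical, and the sketch only constructs URLs: a real request would also need an OAuth 2.0 bearer token in the `Authorization` header, with `GET` used for the download and `POST` for the upload.

```python
from urllib.parse import quote, urlencode

API_ROOT = "https://storage.googleapis.com"


def download_url(bucket: str, object_name: str) -> str:
    """URL for fetching an object's contents (HTTP GET)."""
    # Object names sit in the URL path, so "/" must be percent-encoded.
    encoded = quote(object_name, safe="")
    return f"{API_ROOT}/storage/v1/b/{bucket}/o/{encoded}?alt=media"


def upload_url(bucket: str, object_name: str) -> str:
    """URL for a simple single-request upload (HTTP POST)."""
    query = urlencode(
        {"uploadType": "media", "name": object_name}, quote_via=quote
    )
    return f"{API_ROOT}/upload/storage/v1/b/{bucket}/o?{query}"


# Hypothetical bucket and object names, for illustration only.
get_url = download_url("my-bucket", "reports/2024/summary.csv")
put_url = upload_url("my-bucket", "reports/2024/summary.csv")
```

Note how the bucket appears once in the path and the object name is just an opaque key inside it: the slashes in `reports/2024/summary.csv` are part of the name, not real directories.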

The low cost of this storage type (cents per GB), together with its serverless approach and ease of use, has contributed to its widespread adoption in cloud-native system architectures. The cloud provider handles the laborious tasks of data replication, availability, integrity checks, capacity planning, and so on, while applications save and retrieve objects through APIs.

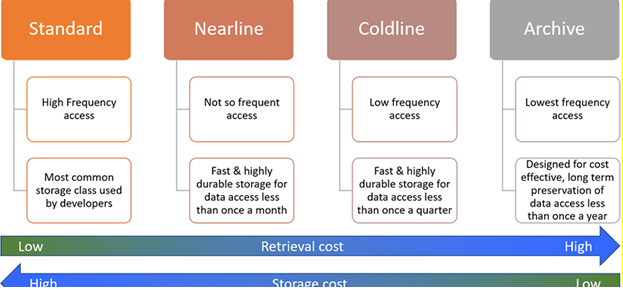

Based on factors such as cost, availability, and access frequency, Cloud Storage offers four storage classes: Standard, Nearline, Coldline, and Archive, as shown in Figure 1.19:

- Standard class: This class of storage allows for high frequency access and is the type of storage that is most often used by software developers.

- Nearline storage class: This class is used for data that is accessed infrequently, generally no more than once a month.

- Coldline storage class: This class is used for data that is typically accessed no more than once every three months.

- Archive storage class: This class is used for data that is accessed with the lowest frequency and is often used for the long-term preservation of data.
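The access-frequency guidance above can be sketched as a small helper that suggests a class from the expected number of days between accesses. The function name is hypothetical, and the 365-day cutoff for Archive is an assumption based on Google's general guidance that Archive suits data accessed less than once a year; the text above only says "lowest frequency". Real decisions should also weigh retrieval fees and minimum storage durations.

```python
def suggest_storage_class(days_between_accesses: float) -> str:
    """Suggest a Cloud Storage class from the expected access interval."""
    if days_between_accesses < 0:
        raise ValueError("days_between_accesses must be non-negative")
    if days_between_accesses < 30:
        return "STANDARD"   # accessed more than once a month
    if days_between_accesses < 90:
        return "NEARLINE"   # at most about once a month
    if days_between_accesses < 365:
        return "COLDLINE"   # at most about once every three months
    return "ARCHIVE"        # assumed cutoff: less than once a year


# Example: backups that are read roughly twice a year.
backup_class = suggest_storage_class(180)
```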